Just spent my entire evening diving into Anthropic's new paper "Scaling Monosemanticity" and wow - this is some groundbreaking stuff. Let me break down why I'm so excited:

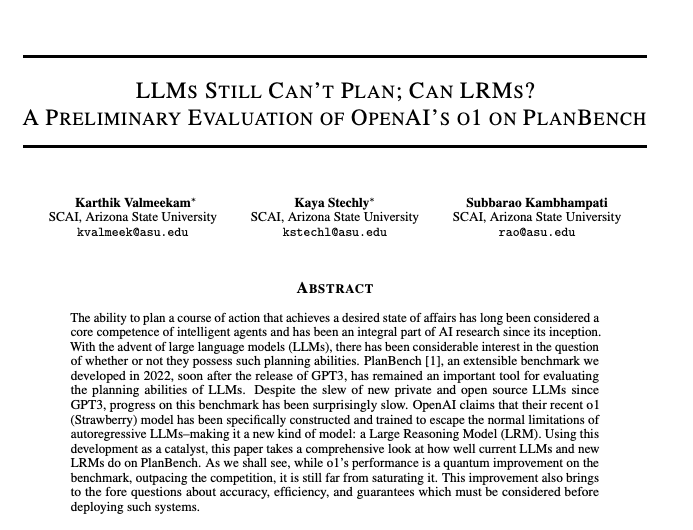

First, some background: these researchers basically managed to "decode" what's happening inside Claude 3 Sonnet (their mid-sized production model) using sparse autoencoders (SAEs) trained on activations from the middle of the model. Individual neurons are hard to read because each one fires for lots of unrelated things. The SAE instead breaks those activations down into millions of features, most of which line up with a single concept that actually makes sense to humans.
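To make the SAE idea concrete, here's a toy sketch in plain Python. This is my own simplification, not Anthropic's code: a ReLU encoder turns an activation vector into non-negative feature activations, a linear decoder rebuilds the vector as a sum of feature directions, and training would minimize reconstruction error plus an L1 penalty that pushes most features to zero. The sizes, random weights, the `ToySAE` name and the `l1_coeff` value are all made up for illustration, and the paper's exact loss has extra details I'm skipping.

```python
import random


def matvec(rows, v):
    # Multiply a matrix (stored as a list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in rows]


class ToySAE:
    """Hypothetical toy SAE: d_model-dim activations -> n_features sparse codes.

    Weights are random here; a real SAE is trained on huge numbers of model activations.
    """

    def __init__(self, d_model, n_features, seed=0):
        rng = random.Random(seed)
        self.W_enc = [[rng.gauss(0.0, 0.5) for _ in range(d_model)] for _ in range(n_features)]
        self.b_enc = [-0.5] * n_features  # negative bias keeps most features switched off
        # One decoder direction (a vector in activation space) per feature.
        self.W_dec = [[rng.gauss(0.0, 0.5) for _ in range(d_model)] for _ in range(n_features)]
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        # Feature activations: ReLU(W_enc @ x + b_enc), so every code is >= 0.
        pre = [p + b for p, b in zip(matvec(self.W_enc, x), self.b_enc)]
        return [max(0.0, p) for p in pre]

    def decode(self, f):
        # Reconstruction: b_dec plus each active feature's direction scaled by its activation.
        x_hat = list(self.b_dec)
        for fi, direction in zip(f, self.W_dec):
            if fi > 0.0:
                x_hat = [xh + fi * d for xh, d in zip(x_hat, direction)]
        return x_hat

    def loss(self, x, l1_coeff=1e-3):
        # Objective: squared reconstruction error + L1 sparsity penalty.
        # The codes are non-negative, so sum(f) is their L1 norm.
        f = self.encode(x)
        x_hat = self.decode(f)
        mse = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        return mse + l1_coeff * sum(f), f


sae = ToySAE(d_model=8, n_features=32)
x = [0.3, -1.2, 0.7, 0.0, 2.1, -0.4, 0.9, -0.8]
total_loss, codes = sae.loss(x)
active = [i for i, c in enumerate(codes) if c > 0]
print(f"{len(active)} of {len(codes)} features active, loss={total_loss:.3f}")
```

The point of the sparsity penalty is that each input gets explained by a handful of features instead of all of them at once, which is what makes individual features interpretable.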

The coolest findings:

- They found features for EVERYTHING:
  - Individual features for specific people (like Einstein, Feynman)
  - Features that fire on code bugs and security vulnerabilities
  - Features that recognize landmarks (like the Golden Gate Bridge) in both text AND images
  - Features for abstract concepts like "betrayal" or "internal conflict"

- What blew my mind is that these features actually WORK. Clamping a feature to a high value changes what the model does, so they're not just correlations:
  - Turning up a "code error" feature made Claude act as if correct code had bugs
  - Turning up a "scam email" feature got it to write scam emails
  - Turning up a "sycophancy" feature made it act sycophantic
  - They even found features related to how the model thinks about itself as an AI!

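- The scaling stuff is fascinating:
  - They trained SAEs at three sizes: roughly 1M, 4M, and 34M features
  - Found clear scaling laws: SAE loss drops predictably as compute goes up, which they used to pick settings like the number of features and training steps
  - Showed that how often a concept appears in the training data predicts whether an SAE of a given size will have a feature for it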
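The "activating a feature" trick is feature steering: the paper clamps chosen features to high values during the forward pass. Here's a toy sketch of just the vector arithmetic, under my own assumptions: a hand-built 2-feature SAE, a hypothetical `clamp_feature` helper, and the SAE's reconstruction error added back so only the chosen feature changes (I believe the paper does something similar, but treat that as an assumption). The "code error" label on feature 1 is pure pretend; in the real experiments this happens inside Claude at the layer the SAE was trained on, and the steered activation flows on into later layers.

```python
def matvec(rows, v):
    # Multiply a matrix (stored as a list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in rows]


# Hypothetical toy SAE: 3-dim activations, 2 features with hand-picked weights.
W_ENC = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]
B_ENC = [0.0, 0.0]
W_DEC = [[1.0, 0.0, 0.0],   # direction for feature 0
         [0.0, 1.0, 0.0]]   # direction for feature 1 (pretend it's "code error")
B_DEC = [0.0, 0.0, 0.0]


def encode(x):
    # ReLU encoder: non-negative feature activations.
    return [max(0.0, p + b) for p, b in zip(matvec(W_ENC, x), B_ENC)]


def decode(f):
    # Linear decoder: bias plus each feature's direction scaled by its activation.
    x_hat = list(B_DEC)
    for fi, direction in zip(f, W_DEC):
        x_hat = [xh + fi * d for xh, d in zip(x_hat, direction)]
    return x_hat


def clamp_feature(x, feature_idx, value):
    """Return activation x with one SAE feature forced to `value`."""
    f = encode(x)
    # Keep whatever the SAE fails to reconstruct so only the chosen feature changes.
    error = [a - b for a, b in zip(x, decode(f))]
    f_clamped = list(f)
    f_clamped[feature_idx] = value
    return [s + e for s, e in zip(decode(f_clamped), error)]


x = [0.5, 0.0, 0.2]  # feature 1 is off in this activation
steered = clamp_feature(x, feature_idx=1, value=5.0)
print(steered)  # [0.5, 5.0, 0.2]
```

Because the decoder is linear, this works out to adding (new value minus current activation) times the feature's decoder direction to the original activation; everything else about the input is left alone.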

But here's why this matters for AI safety (and why I'm kind of nervous):

- They found features related to deception, power-seeking, and manipulation

- Found features for dangerous knowledge (like making bioweapons)

- Discovered features related to bias and discrimination

- Uncovered how the model represents its own "AI identity"

The limitations are important though:

- Training SAEs this big takes MASSIVE compute

- Even the 34M-feature SAE almost certainly misses plenty of concepts the model knows

- It's not clear if this will scale to even bigger models

- Features can be spread across multiple layers (cross-layer superposition), and these SAEs only looked at one layer

Personal take: This feels like a huge step forward in actually understanding what's going on inside these models. Like, we're not just poking at a black box anymore - we can actually see the concepts the model is using! But it's also kind of scary to see just how much knowledge about potentially dangerous stuff is encoded in there, even though the authors stress that a feature existing doesn't mean the model actually acts on it.

TLDR: Anthropic managed to extract millions of interpretable features from Claude 3 Sonnet using sparse autoencoders. Found features for everything from code bugs to deception to self-representation. Both exciting and scary implications for AI safety.

https://transformer-circuits.pub/2024/scaling-monosemanticity/index.html