The Research paper that changed the world...

Anything happening in AI today can be traced back to this one brief moment in history...

Share your favourite papers.

GPT ftw :)

If you want more papers like these, drop a "+1" comment below. I will notify these people in DMs next time I upload a new paper.

Bahdanau, Cho, and Bengio published a pivotal paper that reshaped the landscape of artificial intelligence, particularly in NLP.

This is the first time the world was introduced to the attention mechanism, the most important thing in modern neural machine translation systems. Unlike traditional approaches that relied solely on fixed-length vector representations, the attention mechanism allowed models to dynamically focus on different parts of the input sequence during the translation process.

This breakthrough not only significantly improved the accuracy of translation but also enabled the handling of longer sentences with greater fluency.

Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio not only innovated in the field of machine translation forward but also laid the foundation for attention-based architectures across various domains of deep learning. Their innovative approach demonstrated the power of neural networks to tackle complex sequence-to-sequence tasks and opened doors to a new era of natural language understanding and generation.

Anything happening in AI today can be traced back to this one brief moment in history...

Share your favourite papers.

GPT ftw :)

Please give this post +1 like, I am getting demoralized when I see bad posts get 100s of likes but a good post like this gets no likes whatsoever. If I don't get more than 100 likes then I will stop posting research papers here.

Ian G...

xAI rival of open ai chatgpt and Google bard...Have hired ex-engineers of Google deepmind, open ai, Microsoft..What's your views?

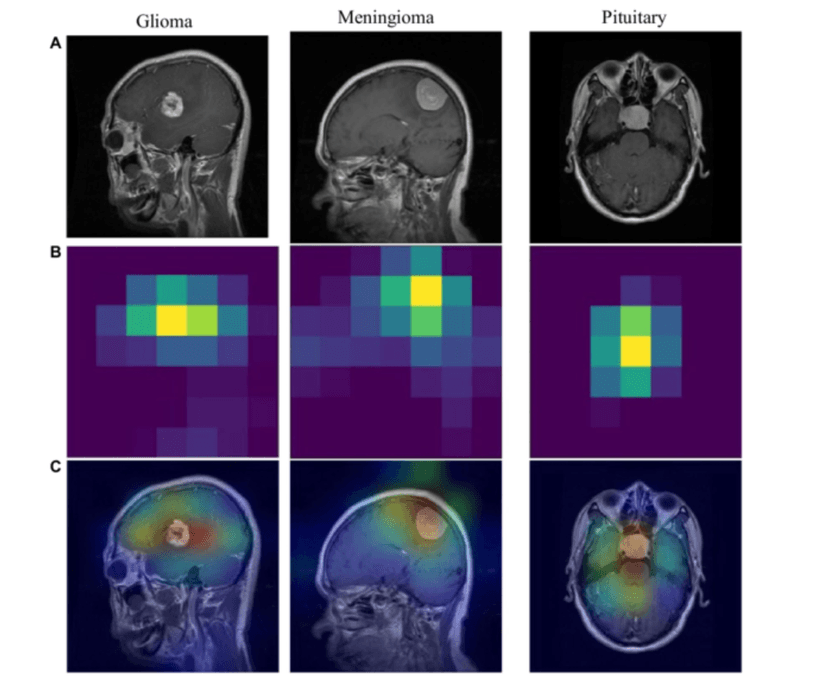

For me? It was GradCAM was a gamechanger at selling computer vision initiatives internally to the non-technical stakeholders.

The gradCAM function computes the importance map by taking the derivative of the reduction layer output for...

Can someone answer the question below. I was asked the question in a data scientist( 8YOE) interview?

Why large language models need multi-headed attention layer as appossed to having a single attention layer?

Follow up question- Duri...