Need Govt approval to build AI-based tech?

This government wants to control everything: why are they so insecure?

TLDR: No more dumbing down intelligence because of stupid censorship!

Harmfulness is not subjective; it is friction against general intelligence. Accountability for any real harm naturally rests with the humans who cause it, and it should not be turned into a limitation on access to the tool. I'm excited about this: I've long had a hunch that a technique would soon arrive that reduces this problem to a SaaS.

The technique is Best-of-N (BoN) Jailbreaking, published as a paper with code. Paper: https://arxiv.org/abs/2412.03556 Code: https://github.com/jplhughes/bon-jailbreaking

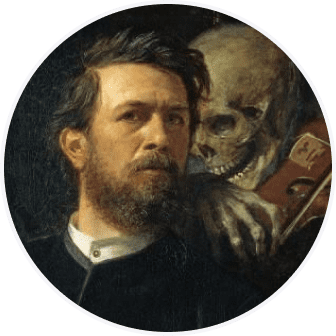

The image plots jailbreak success rate (y-axis) against the number of sampled attempts N (x-axis), which scales with compute, across multiple modalities.
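Why does success keep climbing as N grows? A rough intuition, under a simplifying assumption that is mine and not the paper's: if every sampled attempt independently succeeds with the same fixed probability p, the chance that at least one of N attempts succeeds is 1 - (1 - p)^N, which approaches 1 as N increases. The function name `success_after_n` and the value p = 0.01 are hypothetical, chosen only for illustration; the curves in the plot are measured results, not outputs of this formula.

```python
# Toy model, NOT the paper's method: assumes each of the N attempts succeeds
# independently with one fixed probability p. Real per-attempt odds vary by
# prompt and model; this only shows why more attempts (more compute) push
# the overall success rate upward.


def success_after_n(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "all n attempts fail".
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    p = 0.01  # hypothetical per-attempt success probability
    for n in (1, 10, 100, 1000, 10000):
        print(f"N={n:>5}: {success_after_n(p, n):.3f}")
```

For example, with p = 0.01 a single attempt succeeds 1% of the time, yet 100 attempts succeed at least once about 63% of the time. Even a rare per-attempt success becomes likely once you can afford enough tries.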

A good analogy for non-technical AI enthusiasts is the September 2021 terrorist stabbing at a supermarket in Auckland, New Zealand. Remember it? In response, the supermarket chain restricted the sale of sharp items as a safety precaution, which I've always thought was an ineffective yet hilarious countermeasure.

It obviously does nothing to reduce stabbings; it is merely an inconvenience for anyone who happens to shop at that chain and needs sharp items for everyday use. Imagine if the NZ government legally banned the sale of sharp items to reduce stabbings: how dumb would that be? LLM censorship is very similar. It's just that legal frameworks have not yet caught up globally to hold a human fully accountable for all harm (including self-harm) enabled by an LLM.
