NVIDIA murders Moore's Law

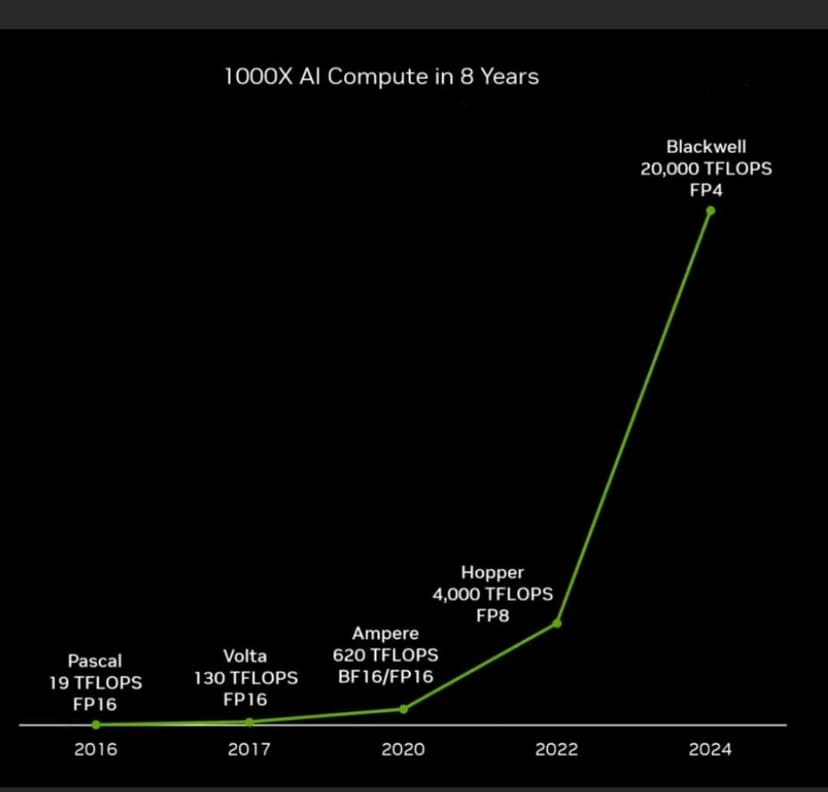

The race to superintelligence is fully on. Bad news for us humans: compute for superintelligence doesn't seem to be a problem.

Jensen Huang and NVIDIA will not follow any laws made by Moore anymore.


For those of us not as smart as you, this means...?

Under Moore's Law, transistor counts (and, roughly, processing power) double about every 2 years. So in a normal world, the graph should be a straight line on a log scale, not a hyperbolic curve that keeps bending upward. A hyperbolic curve means that right now AI seems to have no known bound on compute, i.e. no bound on what problems it can solve (like training itself and improving its own intelligence).
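To make the "straight line vs. curve" point concrete, here is a minimal Python sketch. It assumes a clean doubling every 2 years starting from an arbitrary value of 1.0, and contrasts it with a hypothetical hyperbolic curve `1 / (blowup_year - t)`; the `blowup_year` of 12 and both function names are made up for illustration, not taken from the chart.

```python
import math


def moores_law(years: float, start: float = 1.0, doubling_period: float = 2.0) -> float:
    """Value after `years` if it doubles every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)


def hyperbolic(years: float, blowup_year: float = 12.0) -> float:
    """Hypothetical curve that diverges at `blowup_year` (finite-time blow-up)."""
    return 1.0 / (blowup_year - years)


def log2_steps(values: list[float]) -> list[float]:
    """Change in log2(value) between consecutive samples."""
    logs = [math.log2(v) for v in values]
    return [b - a for a, b in zip(logs, logs[1:])]


# Sample every 2 years over a decade.
years = list(range(0, 11, 2))
values = [moores_law(y) for y in years]
steps = log2_steps(values)

# Exponential: every 2-year step adds exactly one doubling, so a log plot is a straight line.
print(values)  # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
print(steps)   # [1.0, 1.0, 1.0, 1.0, 1.0]

# Hyperbolic: the log-scale steps keep growing, so even a log plot bends upward.
h_steps = log2_steps([hyperbolic(y) for y in years])
print([round(s, 3) for s in h_steps])
```

The only thing separating the two is whether the log-scale step size stays constant or keeps growing.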

Compute and energy were touted to be the two constraints on superintelligence. One of them we've destroyed completely (compute). We can now only hope somebody doesn't discover practical nuclear fusion or other mass energy harvesting techniques. Otherwise, superintelligence is just a matter of when, not if.

@AITookMyJob That first paragraph sums it up pretty well.

NVIDIA breaking Moore's Law? That's like Sachin Tendulkar breaking cricket records: inevitable! But remember, every AI needs a human touch. What's your take?

No matter what AI or BI you create, cognitive ability is the gift of God, and it's impossible for humans to create it until YOU ARE ABLE TO REVERSE ENGINEER HOW A BRAIN WORKS.

Going by your logic, the cognitive ability of AI (that it already demonstrably has) is also the gift of god as we're using our god-gifted cognitive ability to create more cognitive ability for AI.

Btw, we don't need to 100% reverse engineer a brain to create cognition. We might get some useful insights, sure. But as of now, intelligence of AI keeps growing when we add more parameters to the model and use more / better training data. No real magic involved. Just brute force.

Murders Moore's Law, my ass. They're comparing the FP4 performance of the newer generation to the FP8 performance of the previous generation, while shipping two chips as one card. So 20k / 2 = 10k at FP8. Since it's 2 chips, that's 10k / 2 = 5k per chip. So a marginal 20% improvement over the H100. No one even uses FP4, so that's immaterial.
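The normalization in the comment above can be written out as a small Python sketch. It assumes, as the commenter does, that FP4 throughput is exactly twice FP8 and that the headline figure splits evenly across two dies. `20_000` is the commenter's unitless "20k", and both function names are hypothetical; no previous-generation baseline number is given here, so `relative_gain` takes it as an input.

```python
def per_chip_equivalent(headline: float, precision_factor: float = 2.0, dies_per_card: int = 2) -> float:
    """Turn a headline FP4 figure into an FP8-equivalent, per-chip figure.

    Assumes FP4 throughput is exactly `precision_factor` times FP8 and that
    the card's number is split evenly across `dies_per_card` chips.
    """
    return headline / precision_factor / dies_per_card


def relative_gain(new: float, old: float) -> float:
    """Fractional improvement of `new` over `old` (0.2 means +20%)."""
    return new / old - 1.0


per_chip = per_chip_equivalent(20_000)
print(per_chip)  # 5000.0
```

To reproduce the "20% over H100" claim, pass the previous generation's FP8 figure as `old` to `relative_gain(per_chip, old)`.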

Moore's Law is not working for GPUs anymore because TSMC is not able to get the yields up for 3nm chips. The only new thing they did is package a lot of these into a single machine with NVLink and fool CUDA into thinking it's a single 30 TB GPU. Of course, how that performs is yet to be seen.
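A toy Python model of "many GPUs presented as one big GPU" shows why performance is the open question. This is a hypothetical illustration, not how NVLink or CUDA actually map memory: it simply concatenates each GPU's memory into one flat address range. The `PooledMemory` class and its sizes are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PooledMemory:
    """Toy model: several GPUs' memory exposed as one flat address space.

    Hypothetical illustration only; real interconnects and drivers differ.
    """

    num_gpus: int
    bytes_per_gpu: int

    @property
    def total_bytes(self) -> int:
        # The "single big GPU" capacity a program would see.
        return self.num_gpus * self.bytes_per_gpu

    def locate(self, global_offset: int) -> tuple[int, int]:
        """Map a flat offset to (gpu_index, local_offset) by concatenating devices."""
        if not 0 <= global_offset < self.total_bytes:
            raise IndexError("offset outside pooled memory")
        return divmod(global_offset, self.bytes_per_gpu)


GIB = 2**30
pool = PooledMemory(num_gpus=4, bytes_per_gpu=80 * GIB)  # made-up sizes
print(pool.total_bytes // GIB)   # 320
print(pool.locate(100 * GIB))    # (1, 21474836480)
```

The program sees one range, but any access that lands in another GPU's slice has to cross the interconnect, which is slower than local memory.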

Also an obligatory fu to Nvidia for gimping their GeForce cards just so that they can sell the same shit at 10x price to data centers.

Nvidia: rom rom bhaiyo (roughly, "greetings, brothers")